Doctor of Philosophy (Ph.D.)

Department of Computer Science and Automation, Indian Institute of Science, Bangalore

2016-2021

Advisor: Prof. Partha Talukdar

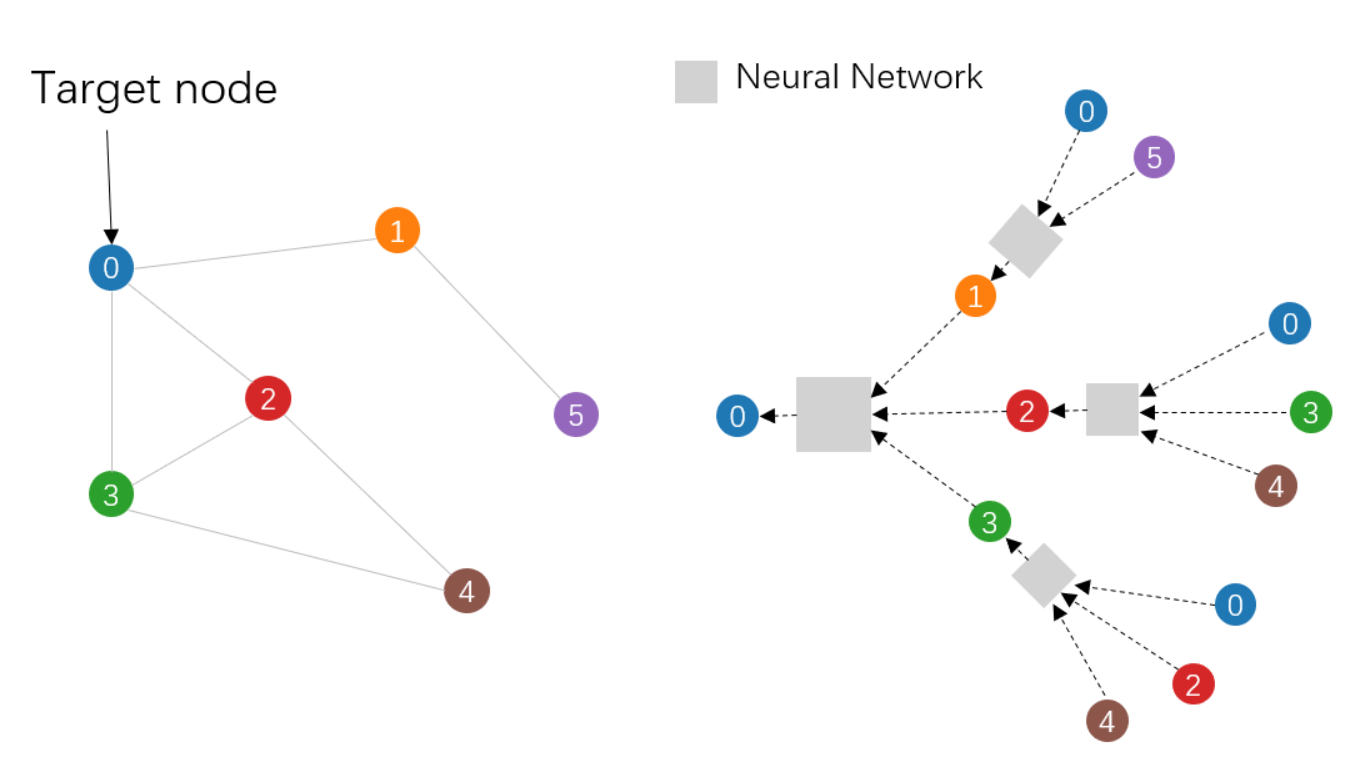

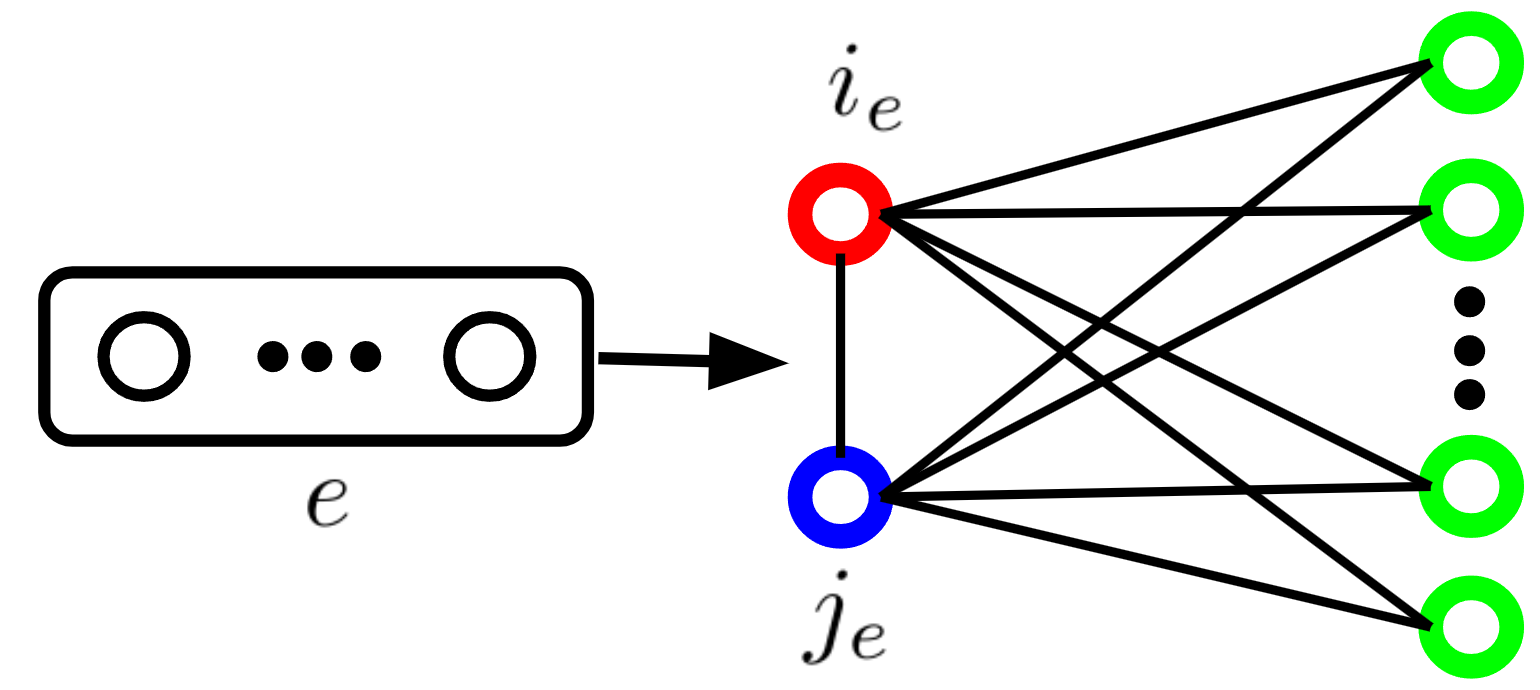

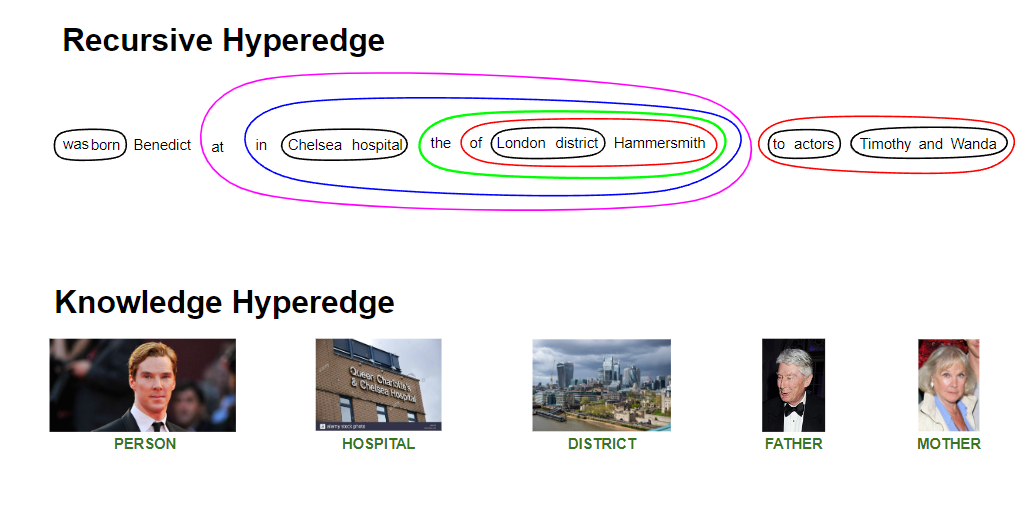

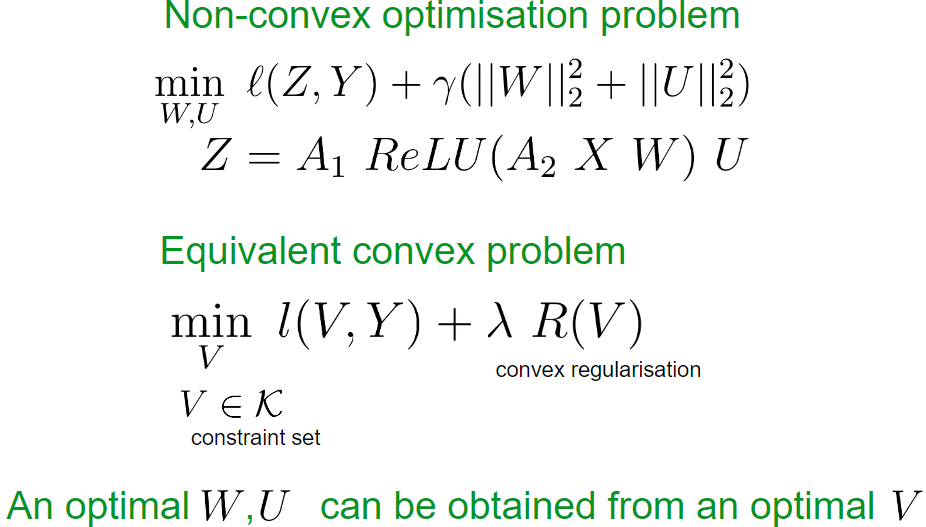

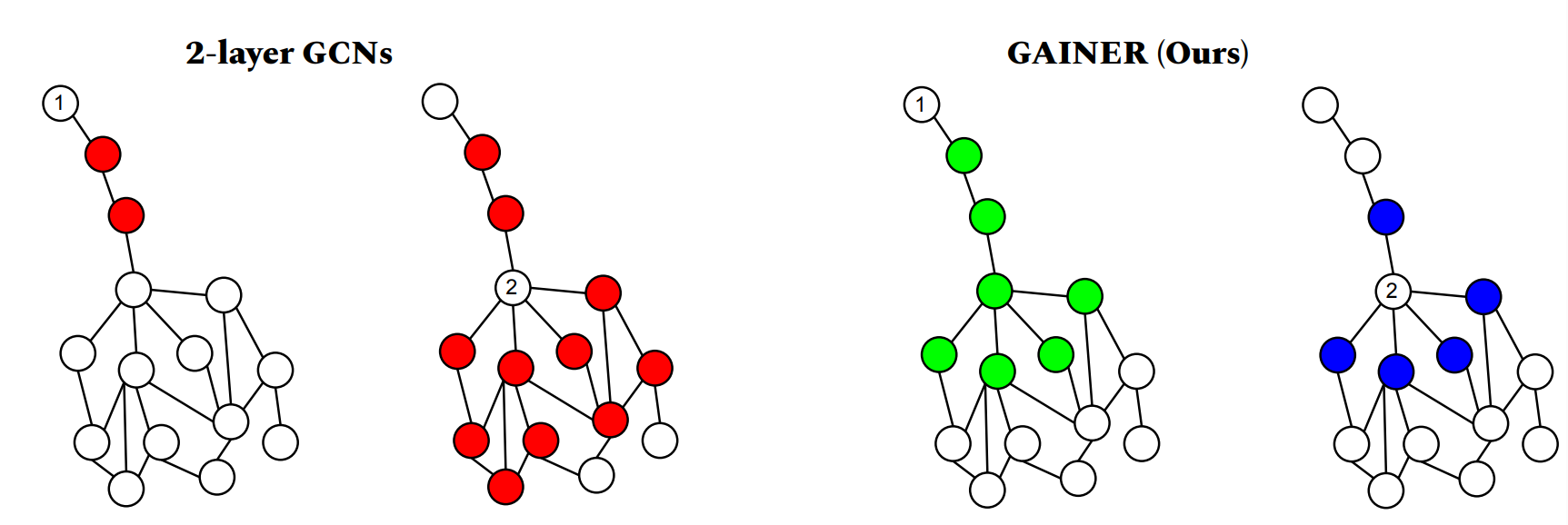

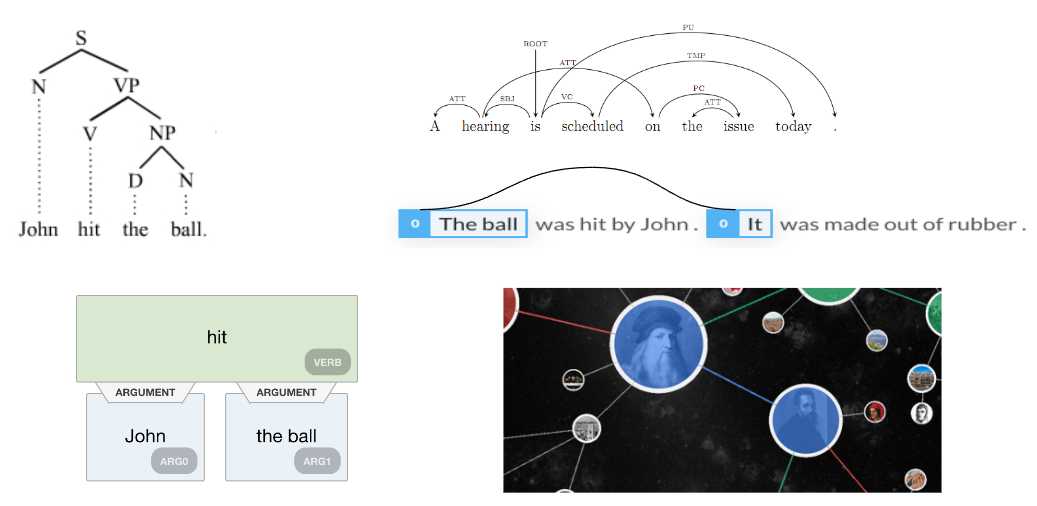

Thesis: Deep Learning over Hypergraphs

Master of Technology (M.Tech.)

International Institute of Information Technology, Bangalore

2014-2016

Advisor: Prof. Ashish Choudhury